In 2025 the artificial intelligence sector has undergone a fundamental reorganisation, shifting from proprietary dominance toward a new era of high-performance, open-source frameworks. Large Language Models (LLMs) have moved from experimental research novelties to essential business infrastructure, powering everything from customer service automation to complex data analysis. For modern enterprises, the question is no longer whether to adopt AI, but how to build sovereign AI infrastructure, meaning systems the organisation itself controls, that balances performance, cost, and data privacy.

1. The New Model Hierarchy: LLMs vs SLMs

Choosing the right model in 2025 requires matching specific business needs to the appropriate model scale.

- Large Language Models (LLMs): Powerhouses like GPT-4o, Claude Opus 4, and Gemini 2.5 rely on very large parameter counts (exact figures are often undisclosed) to deliver general-purpose versatility and complex reasoning across multiple domains.

- Small Language Models (SLMs): Efficient specialists like Mistral 7B and Phi-3, far smaller than frontier LLMs, provide domain-specific precision and can run on commodity hardware or edge devices, in some deployments reducing operational expenses by up to 70%.
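Why a 7B-parameter model fits on standard hardware comes down to simple arithmetic: weight memory is roughly the parameter count times the bytes stored per parameter. The sketch below assumes weights dominate memory use and ignores the KV cache, activations and runtime overhead, so real requirements will be somewhat higher; the precision table and function name are illustrative, not taken from any particular runtime.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: weights dominate; KV cache, activations and runtime overhead are ignored.
BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantisation
    "int4": 0.5,  # 4-bit quantisation
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    params_7b = 7e9  # nominal size of a "7B" model such as Mistral 7B
    for precision in ("fp16", "int8", "int4"):
        print(f"{precision}: ~{weight_memory_gb(params_7b, precision):.1f} GB")
    # → fp16: ~14.0 GB, int8: ~7.0 GB, int4: ~3.5 GB (one per line)
```

At 4-bit precision a 7B model's weights fit comfortably in the memory of a single consumer GPU or a laptop, which is what makes edge deployment practical.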

2. The RAG Revolution: Moving Beyond “Frozen” Knowledge

Traditional foundation models are “closed-book” systems, with knowledge frozen at the time of their training. Retrieval-Augmented Generation (RAG) has emerged as the most widely adopted architecture for overcoming this limitation: relevant passages are retrieved from an organization’s private, up-to-date data at query time and supplied to the model alongside the user’s question.
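The retrieve-then-augment loop can be sketched in a few lines. This is a minimal illustration under stated assumptions: the documents and function names are hypothetical, naive keyword overlap stands in for the embedding or hybrid search a production system would use, and the final call to an LLM is left out.

```python
import re
from collections import Counter

# Hypothetical private knowledge base: in production these would be chunks of
# internal documents held in a search engine or vector store.
DOCUMENTS = [
    "Refunds are processed within 5 business days of approval.",
    "The VPN must be used when accessing internal dashboards remotely.",
    "Quarterly sales reports are published on the first Monday of each quarter.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most tokens with the query.

    Naive keyword overlap stands in for embedding or hybrid search here.
    """
    query_counts = Counter(tokenize(query))

    def overlap(doc: str) -> int:
        doc_counts = Counter(tokenize(doc))
        return sum(min(n, doc_counts[tok]) for tok, n in query_counts.items())

    return sorted(docs, key=overlap, reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Augment the user's question with retrieved context before calling an LLM."""
    context = "\n".join(f"- {chunk}" for chunk in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    # The resulting prompt would be sent to whichever LLM the system uses.
    print(build_prompt("How long do refunds take to be processed?", DOCUMENTS))
```

Because the knowledge lives in `DOCUMENTS` rather than in the model's weights, updating what the system "knows" means updating the document store, not retraining the model.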

Key players in the 2025 RAG ecosystem include:

- Meilisearch: A search engine whose hybrid search blends keyword relevance with semantic (vector) recall, designed for fast retrieval.

- LangChain: A modular framework for building complex, multi-step agentic workflows.

- LlamaIndex: A specialised data framework designed to connect LLMs with private data sources like SQL databases and PDFs.
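The idea of blending keyword relevance with semantic recall can be illustrated with a generic weighted score fusion. This is a sketch of one common technique, not Meilisearch's internal ranking algorithm: keyword and semantic scores live on different scales, so each is min-max normalised to [0, 1] before mixing. The function names, the `semantic_weight` parameter and the example scores are all hypothetical.

```python
def min_max(scores: dict[str, float]) -> dict[str, float]:
    """Rescale scores to [0, 1] so keyword and semantic scores are comparable."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - lo) / (hi - lo) for doc, s in scores.items()}


def hybrid_rank(
    keyword: dict[str, float],
    semantic: dict[str, float],
    semantic_weight: float = 0.5,
) -> list[str]:
    """Rank documents by a weighted blend of normalised keyword and semantic scores.

    semantic_weight = 0.0 is pure keyword search; 1.0 is pure semantic search.
    Documents missing from one result list score 0 for that signal.
    """
    kw, sem = min_max(keyword), min_max(semantic)
    blended = {
        doc: (1 - semantic_weight) * kw.get(doc, 0.0) + semantic_weight * sem.get(doc, 0.0)
        for doc in set(kw) | set(sem)
    }
    # Sort by descending score, breaking ties alphabetically for determinism.
    return sorted(blended, key=lambda doc: (-blended[doc], doc))


if __name__ == "__main__":
    # Hypothetical scores: "a" matches the query's exact terms, "b" and "c" match its meaning.
    keyword_scores = {"a": 12.0, "b": 3.0, "c": 0.0}
    semantic_scores = {"a": 0.20, "b": 0.90, "c": 0.85}
    for w in (0.0, 0.5, 1.0):
        print(w, hybrid_rank(keyword_scores, semantic_scores, w))
    # → 0.0 ['a', 'b', 'c'] / 0.5 ['b', 'a', 'c'] / 1.0 ['b', 'c', 'a']
```

Shifting the weight moves the ranking from exact-term matches toward documents that are merely close in meaning, which is the trade-off hybrid search engines let you tune.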

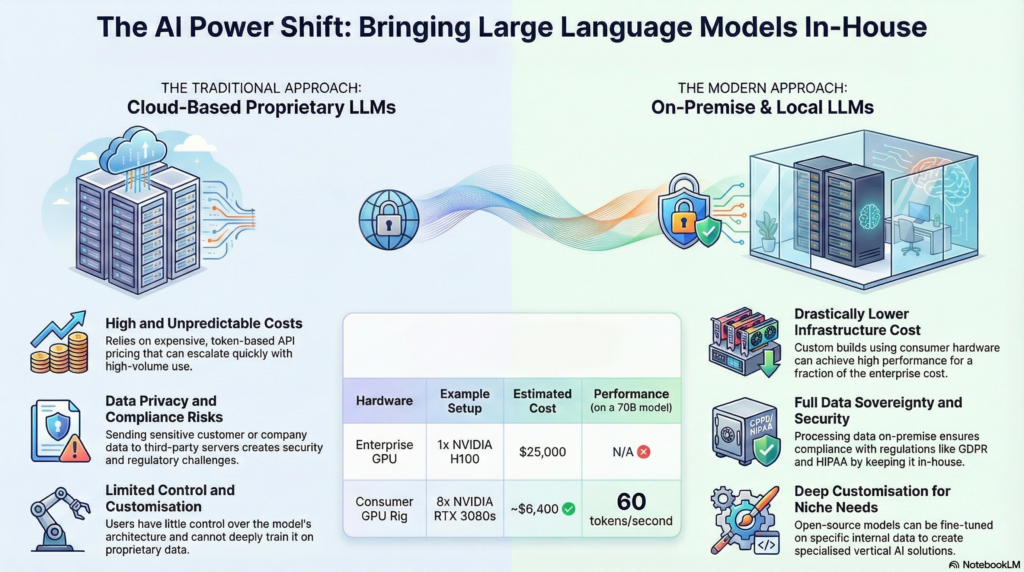

3. The Economic Crossover: API vs Self-Hosted

Choosing between proprietary APIs and self-hosted models is largely a choice between Operational Expenditure (OpEx) and Capital Expenditure (CapEx). APIs offer a low barrier to entry with no upfront costs, but their costs scale linearly with usage, which can become a burden for high-volume operations.

For businesses processing millions of tokens daily, the crossover point at which self-hosting becomes cheaper than proprietary APIs typically arrives within 6 to 18 months, depending on volume and hardware. Hardware such as multi-GPU NVIDIA RTX 4090 rigs or AMD EPYC servers lets enterprises build production-ready AI systems for under $10,000, with reported stable inference speeds of up to 60 tokens per second for models such as DeepSeek-R1 (in practice often its smaller distilled variants, since the full model has 671 billion parameters).
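The crossover point can be estimated with a simple payback calculation: the upfront hardware cost divided by the monthly saving (API bill minus self-hosting running costs). The sketch below is a simplified model that ignores depreciation, staffing, utilisation limits and future price changes; the function name and every input figure in the example are hypothetical, chosen only to illustrate the arithmetic.

```python
import math


def breakeven_months(
    hardware_cost: float,
    monthly_self_host_opex: float,
    tokens_per_month: float,
    api_price_per_million_tokens: float,
) -> float:
    """Months until cumulative API spend exceeds cumulative self-hosting spend.

    Simple payback model: upfront hardware (CapEx) is recovered by the monthly
    difference between the API bill and self-hosting running costs (OpEx).
    Returns math.inf if self-hosting never becomes cheaper.
    """
    monthly_api_cost = tokens_per_month / 1e6 * api_price_per_million_tokens
    monthly_saving = monthly_api_cost - monthly_self_host_opex
    if monthly_saving <= 0:
        return math.inf
    return hardware_cost / monthly_saving


if __name__ == "__main__":
    # Illustrative inputs only: $10,000 of hardware, $250/month for power and upkeep,
    # 3 million tokens/day (~90 million/month), and a blended API price of $10 per million tokens.
    months = breakeven_months(10_000, 250, 90e6, 10.0)
    print(f"Break-even after ~{months:.1f} months")  # → Break-even after ~15.4 months
```

Because the monthly saving grows with token volume, higher volumes pull the crossover point forward, while at low volumes the API bill may never exceed running costs and the function returns infinity.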

4. Industry Transformation in Action

LLMs are delivering measurable ROI across high-impact sectors:

- E-Commerce: AI-powered search interprets natural-language intent, with reported gains of around 25% in customer satisfaction and engagement rates.

- Healthcare: Hospitals are using local LLMs to convert doctors’ voice notes into structured clinical documentation, supporting HIPAA compliance by keeping sensitive patient data within the facility’s secure perimeter.

- Finance: Localised models enable real-time fraud detection and risk assessment without exposing transaction data to third-party APIs.

5. Data Sovereignty and the Future of Agents

In an era of heightened regulation, such as the EU AI Act, data sovereignty is a primary driver of open-source adoption. Open-source models allow “air-gapped” deployments, ensuring that sensitive intellectual property never leaves the business’s secure environment.

Looking toward 2026, the paradigm is shifting from monolithic systems to “Agentic Swarms”: groups of highly specialised, smaller models that collaborate autonomously to complete complex tasks. This “intelligence-per-watt” optimisation makes high-end AI increasingly viable for small and mid-sized enterprises.
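One simple way such collaboration could be structured is a coordinator that splits work into subtasks and routes each one to the specialised agent that declares the matching skill. The sketch below is a deliberately minimal, centralised routing pattern, not a description of any specific multi-agent framework; real systems add planning, message passing and shared memory. The `Agent` class, `coordinate` function and both example agents are hypothetical, and plain Python functions stand in for calls to small models.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    """A specialised worker; `run` stands in for a call to a small model."""
    name: str
    skills: set[str]
    run: Callable[[str], str]


def coordinate(tasks: list[tuple[str, str]], agents: list[Agent]) -> list[tuple[str, str]]:
    """Route each (skill, payload) subtask to the first agent declaring that skill."""
    results = []
    for skill, payload in tasks:
        agent = next((a for a in agents if skill in a.skills), None)
        if agent is None:
            raise ValueError(f"no agent can handle skill {skill!r}")
        results.append((agent.name, agent.run(payload)))
    return results


if __name__ == "__main__":
    # Hypothetical agents: plain functions replace what would be model calls.
    agents = [
        Agent("extractor", {"extract"}, lambda text: ",".join(re.findall(r"\d+", text))),
        Agent("summariser", {"summarise"}, lambda text: text.split(".")[0] + "."),
    ]
    tasks = [("extract", "Invoice 42 totals 1300 EUR."), ("summarise", "Sales rose. Costs fell.")]
    print(coordinate(tasks, agents))
    # → [('extractor', '42,1300'), ('summariser', 'Sales rose.')]
```

Each agent only needs to be good at its own narrow skill, which is why small, cheap models can fill these roles instead of one large general-purpose model.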

An analogy for understanding enterprise AI: deploying AI in 2025 is like building an enterprise library. A proprietary API is like a premium subscription to a global digital library: easy to access, but with a monthly fee that grows the more you read. Self-hosting an open-source model with RAG is like building your own private library on-site. You pay for the building and the shelves (hardware, i.e. CapEx), but you can fill it with your own unique files (private data), and once it is built, your employees can read and learn as much as they want without paying per page, leaving only running costs such as power and upkeep.